The internet is changing rapidly; it’s a place of new opportunities, but also dangerous pitfalls. From the advent of Artificial Intelligence (AI) to the prevalence of data breaches, individuals and businesses alike are becoming increasingly aware of the risks that come with living in a digital-first world. It’s an issue that experts have studied extensively over the years, and one that Pew Research Center is addressing again with its latest report, ‘How Americans View Data Privacy’. Based on survey data collected from 5,101 US adults between May 15 and 21, 2023, this report provides an in-depth look at the American public’s attitudes toward and experiences with data privacy while highlighting how they’ve changed in recent years. See our summary of the key findings and takeaways below.

Deciding Who to Trust

Americans’ distrust in the current system is strongest when it comes to tech CEOs. It doesn’t take extensive research to find out that most people don’t like Mark Zuckerberg given the number of scandals his company Meta has faced over the years. But then there’s also Adena Friedman, Dan Schulman, and, although he’s technically not CEO of Twitter/X anymore, Elon Musk. The public resents these individuals for the extreme power they wield and the massive wealth they hold and, in the cases of Zuckerberg and Musk, the less-than-rosy track records they have of protecting and respecting their users’ privacy.

According to Pew Research’s latest survey, roughly 77% of Americans state that they have little to no confidence in leaders of social media platforms’ ability to publicly admit to and take responsibility for data misuse. That feeling is understandable given the sheer number of times CEOs have failed to be transparent and forthcoming about their companies’ wrongdoings.

The most recognizable incident of them all dates back to 2014, when Facebook was accused of allowing Cambridge Analytica to access the personal data of up to 87 million users without their permission. This was revealed by whistleblower Christopher Wylie in March 2018 and is still under investigation to this day.

In addition to accessing personal data, Cambridge Analytica is also accused of using the information obtained from Facebook to target users by political ideology and influence the outcome of elections. This specific breach of trust led Facebook’s CEO Mark Zuckerberg to testify before the United States Senate in April 2018. It was the first time Zuckerberg had testified before Congress, and it resulted in a significant shift of public opinion regarding the social media giant.

That’s far from the end of the rap sheet, though. Meta, its subsidiary platforms Instagram and WhatsApp, and competing apps have been embroiled in dozens, if not hundreds, of scandals since the early 2000s. While the law usually catches up, few people consider that enough to address the problem.

Privacy Is the Best Policy

Privacy policies are a good example of the tangible change that past data privacy incidents have caused. These agreements exist to inform individuals about tech platforms’ practices concerning data collection, processing, and usage. They can be considered a symbol of transparency in the otherwise distrustful user-company relationship – an upfront disclosure that hopefully proves there’s nothing to hide.

While data privacy policies are considered best practice to begin with, laws like the European Union’s General Data Protection Regulation (GDPR) have begun making them mandatory. The GDPR not only sets a high bar for data privacy protection but also brings huge fines for companies that violate it.

Tech leaders are expected to take responsibility for the data they collect and use. This is why many companies have gone beyond policy-making, investing in tools and technologies that proactively keep data secure.

But for the average consumer, it’s still not enough. A majority (61%) of people surveyed in Pew’s study say they’re largely skeptical that privacy policies actually fulfill their purpose. Ask anyone on the street – or 69% of individuals in the study – and they’ll tell you that privacy policies are just another thing to tap past during the process of setting up a social media account. 61% of respondents attributed their lack of attention to the fact that many privacy disclosures are written in ‘legalese’ and therefore hard to understand. Other common reasons someone might skim through include policy length, vague terms and conditions, or even disbelief that a social platform will enforce its own rules.

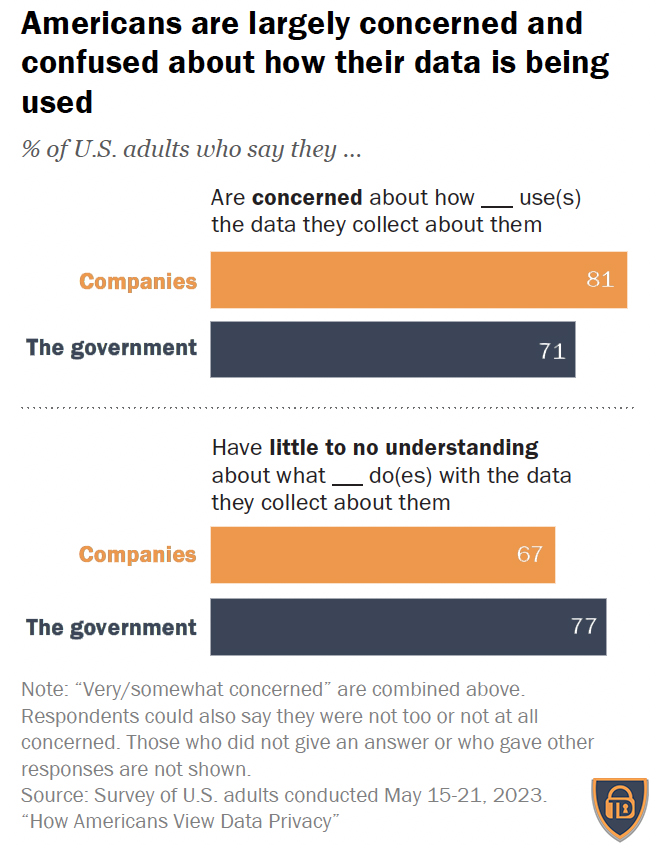

Whatever an individual’s specific reason may be, Pew says that 56% of Americans will click the ‘Agree’ button without actually reading a privacy policy. The policies themselves might not be enough, either, given that just over two-thirds (67%) of Americans apparently understand either nothing or very little about what companies do with their personal information.

The Kids Aren’t Alright

The spread of world-changing technologies we’ve seen over the past few years has affected everyone, but if there’s one demographic that’s been hit in the most worrying way, it’s youth. Children and teenagers now find themselves growing up in a world where past concepts of science fiction are a reality. As adults struggle to learn how and where to use powerful tools like AI to their advantage, kids have a front-row seat to everything. Many also have the freedom to explore new technologies for themselves as soon as they go mainstream, replacing a mother’s worry that her child plays video games for too long with one about what a chatbot might suggest as a fun activity.

Despite the pervasiveness and scale of this issue, a majority (85%) of the American public still believes it is parents’ responsibility to protect their children from all there is online nowadays. That’s fair considering the first-hand ability parents and guardians have to mitigate and teach kids about the technology they’re being exposed to. However, experts emphasize that it’s not a task they should be doing alone. Policing technology use nowadays goes far beyond unplugging the PlayStation after 8 PM; with smartphones and laptops everywhere – even at school – completely monitoring a child’s online activity is next to impossible. There are also things that parents can’t see, such as companies’ use of minors’ personal data. Even with the best of intentions, it’s safe to say that a regular mom or dad cannot protect their child from all potential threats.

With this in mind, 59% of respondents in Pew’s study say major tech platforms have an important role to play in mitigating young people’s online risk. It all ties back to the policies that these companies have in place to protect minors from inappropriate content, cyberbullying, or other online hazards. It’s also a matter of additional diligence; Facebook and Instagram need to not only respect everyone’s privacy but also be mindful of the age of their users. There’s a growing call for major social media apps to introduce age verification processes, which some are already implementing alongside other protections.

The government is another potential actor in the picture. 46% of people surveyed by Pew think it should be held responsible for regulating social media sites and services to protect young people. While certain policies are already in place – like the Children’s Online Privacy Protection Act (COPPA) – more can be done to ensure that youth access and use technology safely. This includes developing collaborative approaches between parents, schools, and tech companies to create better online safety resources. Local law enforcement can also be proactive in monitoring and addressing tech-related crimes against minors, such as cyberbullying and online harassment.

Regardless of whose primary responsibility it is to protect kids from the hazards of the online world, one thing most (89%) people can agree on is that social media platforms know too much about children and their behavior.

This is especially concerning given the increasing number of children using social media sites (in the United States alone, 72% of teens and 48% of children aged 8-12 use some type of social media). Companies may tap into the behaviors and preferences of these young users for everything from targeting ads to creating new products and services to selling data to third parties.

What’s more, a common concern among parents is that the long-term implications of these activities are still largely unknown. We don’t yet have a clear understanding of how social media is shaping children’s perceptions, beliefs, and behaviors.

Putting the Right Safeguards In Place

While the American government has trouble agreeing on anything nowadays, data privacy thankfully doesn’t seem to be politicized just yet. Lawmakers from both major parties are expressing concerns over the lack of privacy protections for consumers and businesses, and are working together to craft solutions.

The only problem is that a will in Congress for change is merely the first step in actually creating it. The US government is notorious for being slow to act on a range of issues, from public safety to social security. It’s even had trouble keeping itself operational as of late, meaning things like tech regulation are likely to be pushed to the back burner. This is concerning given the rapid pace of development in technology, especially as it pertains to Artificial Intelligence. AI has grown in capability and popularity so quickly that most lawmakers don’t know where to start with regulations. A good chunk of Congress doesn’t understand what it’s regulating, just as many consumers use tools like ChatGPT without a full awareness of how large language models process their personal information.

It’s also hard to predict the future; even with a speedy legislative process, there’s no guarantee that regulations and laws stay relevant. We simply don’t know what we don’t know concerning the potential ramifications of AI in the future. Will it lead to job losses? Will it be a net positive or negative for society?

The best the American government seems to be able to do right now is establish its position that citizens’ privacy must come first. Congress has yet to pass any significant enforceable laws on Artificial Intelligence, but the Biden White House took some action by publishing a proposed Blueprint for an AI Bill of Rights in late 2022. Sam Altman, the CEO of OpenAI, also testified before a Senate subcommittee this year, urging the government to take a leadership role in regulating AI. His position surprised many, as tech leaders are often the last people who want rules imposed on their businesses. His stance is in line with 72% of the people Pew surveyed in its study; a majority of Americans want to see more regulation of the use of Artificial Intelligence, while only seven percent believe there should be less. This support is also relatively bipartisan, with 68% of Republicans and 78% of Democrats taking a stance in favor of more regulation.

The Political Perspective of Data Privacy

It’s worth noting that political and ideological views may have something to do with Americans’ broader perception of privacy. Pew Research’s study shows a noticeable difference along party lines; Republicans (77%) are more likely than Democrats (65%) to say they’re hesitant to trust the government’s use of their personal data. This could be related to views on the government as a whole, or more specific opinions on data privacy laws.

Of course, individual views on data use go beyond political differences. Age and gender have an impact too. One study published by the National Institutes of Health shows younger people are generally less concerned about the government using their information than older Americans, while another piece of Pew research claims women are more likely to read a privacy policy before agreeing to it.

Across the board, it’s safe to say that everyone has become more concerned. Pew’s data shows that 71% of Americans now express worry over the government’s use of people’s data, up from 64% in 2019. Meanwhile, 79% of people believe they have no say in what companies or the government do with their information.

New technologies have the potential to bring us incredible opportunities—but they also pose a risk to data privacy. It’s more important than ever for individuals and businesses alike to understand the implications of their online activities, as well as how their data is being used and protected. The latest Pew Research study provides valuable insight into the attitudes and experiences of the American public around data privacy—highlighting how far we’ve come in recent years, as well as where there is still work to be done.

In our fast-paced digital world, the promises of technological advancement often raise concerns about data protection. TeraDact, a leading advocate in data security, underscores the urgency of understanding and fortifying our online activities. Our expertise illuminates the critical need to prioritize data security in today’s evolving digital landscape.

The recent Pew Research study provides invaluable insights into the American perspective on data privacy. While it signals progress in awareness, it also underscores the need for further action. As we embrace emerging technologies, it’s pivotal to remain vigilant about data protection, advocating for clear guidelines, responsible usage, and robust security measures.

TeraDact’s commitment to data privacy resonates deeply in this landscape. At TeraDact, we plan to continue our mission of educating and empowering individuals and businesses about data security. By collaborating with experts like us, individuals and businesses can help champion a future where innovation thrives hand in hand with uncompromised data privacy. Check out our suite of products today.